Table of Contents (click to expand)

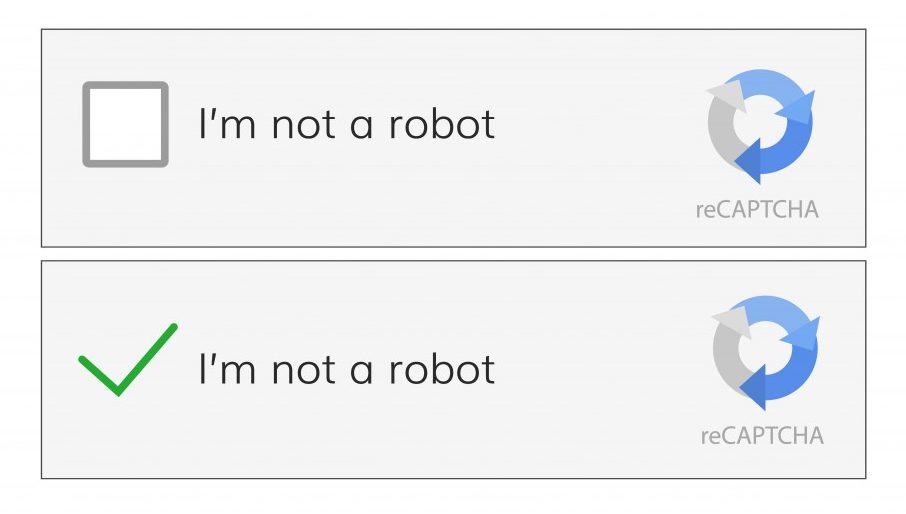

“I’m not a robot” is a version of reCAPTCHA and uses various cues to determine if the user is a human or a bot. It is far more effective than previous methods of CAPTCHA, which used distorted text that users would have to transcribe, as modern bot programs are now able to decipher such text with 99.8% accuracy.

The Internet has made life so easy. Everything you want is just a click away, easily accessible from the depths of your comfort zone. Want to restock your housing supplies? Go to an e-commerce site and click your way through the itinerary. Want to send money without moving an inch from your chair? Use the net-banking services of your bank. Want information about any niche hobby that you care about? Just peruse a range of blogs catered specifically to your tastes.

However, as it always happens, there are advantages and drawbacks to every revolutionary technology. In the case of the Internet, one of the many concerns in managing a digital infrastructure is unsolicited access to websites by bots.

Ranging from financial fraud to emptying the supply of goods provided by an e-commerce website, bots can create havoc. It has become necessary to develop ever-advancing ways to identify who is actually accessing a website; a warm-blooded, flesh and bones human or a cold, script-written bot.

The most common way this is done today, which I’m sure you may have across (unless, of course, you are living under a rock!), is the reCAPTCHA or the single click that differentiates you from a bot.

But how can simply clicking a box make you pass the human test? How effective is this method anyway? Let’s go down the bots vs human rabbit hole!

Why Do Websites Need To Test If You Are A Bot?

As stated earlier, the Internet is not the ideal place we once imagined it would be. It is filled with bad actors who want to take advantage of lapses in the digital infrastructure and use them to fulfill their malicious intent.

Bots can be trained to do all kinds of harm. Bots can create multiple accounts on social networking platforms and email providers (Gmail), thus inflating the number of users and creating havoc elsewhere on the internet with these email accounts. They can fill in forms with unwanted content and spread—you guessed it!—spam. This also applies to comments on websites and other platforms. They make it difficult to gauge the actual human interaction with a platform or website.

Then there are scrapers who use bots to collect email ids of users and use them for all sorts of malpractice. Hackers can use ‘dictionary attacks’ to go through every word in the dictionary to crack passwords, so your passwords aren’t all that safe either. That’s why you see an ‘I’m not a robot’ test when logging in to so many websites. Bots are also used to leave positive reviews and 5 stars on products and services, creating a false image of them.

To circumvent the plethora of these issues, a check is required to differentiate between a legit user and a bot. This is where CAPTCHAs come into the picture.

Also Read: Bot Or Not: How To Tell A Bot From A Human

The Birth Of CAPTCHAs

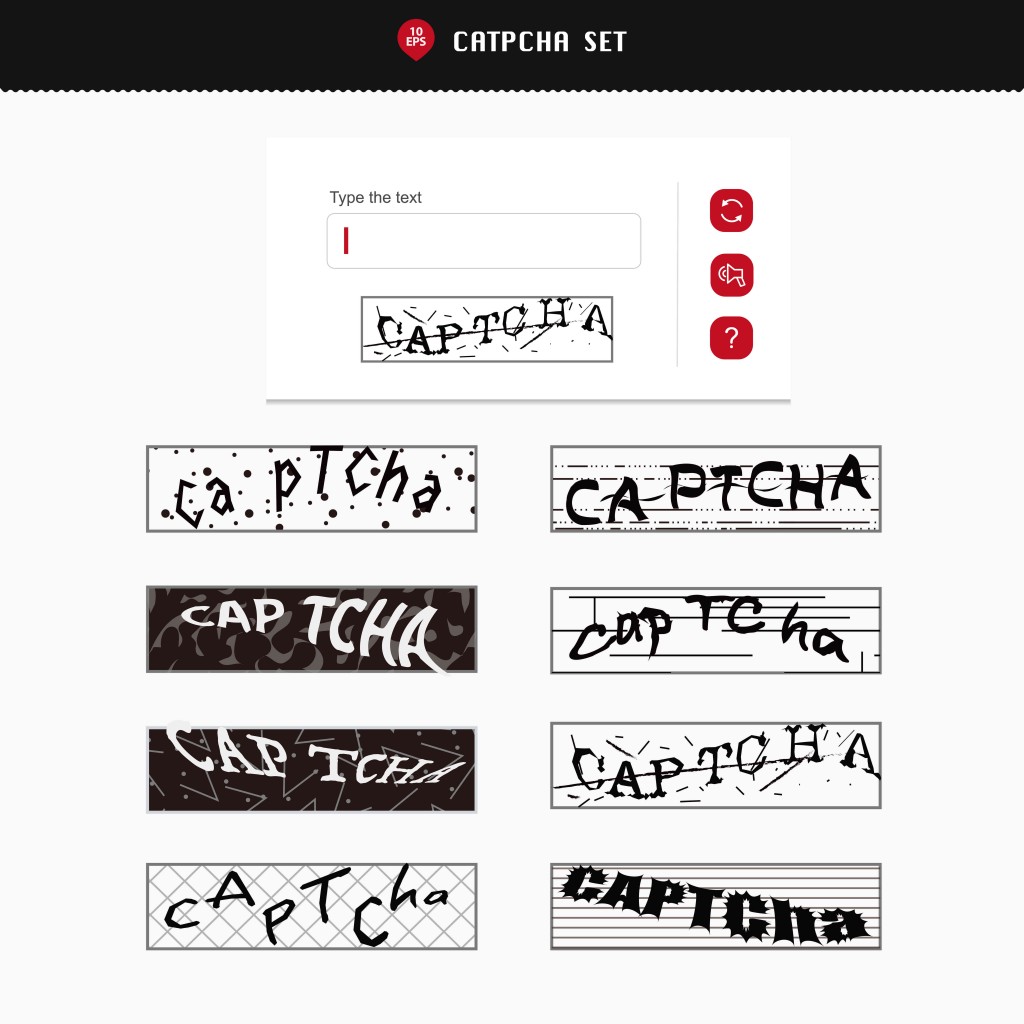

CAPTCHA, short for “Completely Automated Public Turing test to tell Computers and Humans Apart”, was developed by scientists and professors from Carnegie Mellon University and IBM in 2000. It was a way to filter out unwanted bots from websites by using distorted images, puzzles, audio transcription, etc. This method has been used to monitor credit card fraud by PayPal.

The premise of this method is that programs find it hard to decipher distorted visuals, whereas humans can easily decode them. At one point in time, this CAPTCHA method was being used by 200 million users every day, which amounts to spending approximately 500,000 hours transcribing scrambled text! The experts at CMU decided to turn all this effort into something useful and used this method of bot detection to digitize classic books.

This new method was called the ‘reCAPTCHA’ and it used scanned pdfs, books and other materials as distorted tests to make the user transcribe them, which solved two problems—eliminating bots and digitizing classic books.

This spin-off of the CAPTCHA technology was acquired by Google in 2009 and has been further developed by the company.

On April 14th, 2014, Google released a scientific paper stating that it had developed image recognition systems using Deep Convolutional Neural Networks that could transcribe numbers and texts from its Street View Imagery. This meant that programs were now capable of solving the hardest CAPTCHAs with 99.8% accuracy, which made the current system unreliable.

Still, the problem of bots remained prevalent and we needed a way to eliminate them. Enter, No CAPTCHA reCAPTCHA.

Also Read: Man Vs Robot: How Do Their Abilities Stack Up?

No CAPTCHA reCAPTCHA

On December 14th, 2014, Google announced that it had developed a new version of reCAPTCHA—the one that is quite ubiquitous today, the ‘I’m not a robot’ click box.

This version does not make the user transcribe the distorted text, but instead figures out with just one click if you’re a human or bot. This method uses the Advanced Risk Analysis backend for reCAPTCHA developed by Google and outlined on a blog post in 2013.

This backend process analyzes the user engagement before, during and after writing the CAPTCHA to validate them, relying on cues for understanding if a user is a bot or a human. The ‘I’m not a robot’ test uses similar methods, while using the way the user moves the cursor and the pattern of filling out the text field as some of the cues. Google doesn’t publish all these cues as it would, obviously, defeat the purpose of restricting bots.

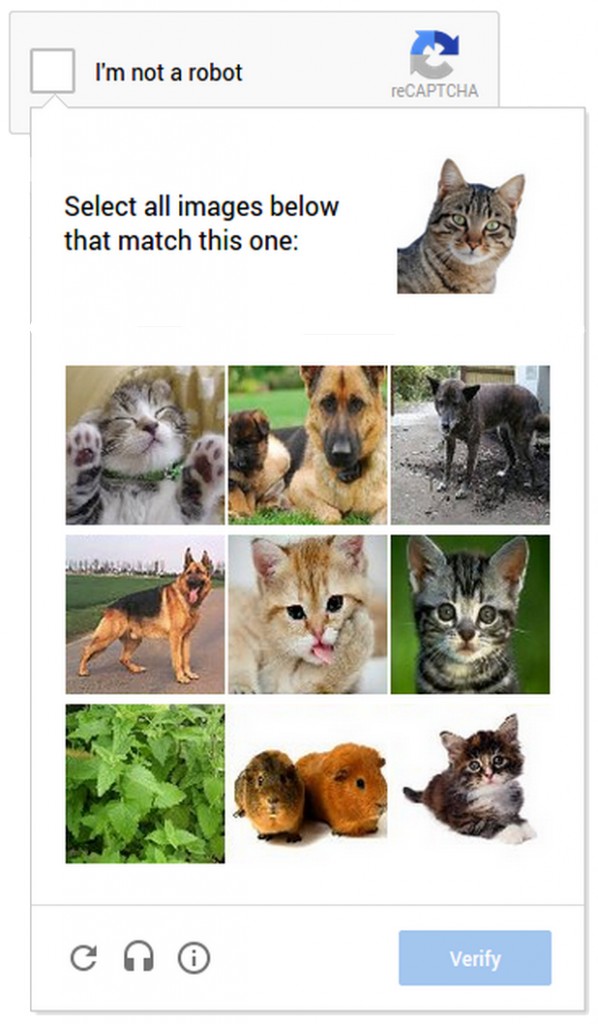

The CAPTCHA hasn’t completely been replaced, however, and is still used with the click-box if Google feels that there is a malicious presence, making it an additional cue upon which it determines the validity of the user. However, the distorted texts have been replaced by images of say, a cat, which the user must identify among other options.

Are “I’m Not A Robot” Checks Effective?

Google states that, upon the release of the new version of the reCAPTCHA, companies like Snapchat, WordPress, and Humble Bundle readily adopted this method. They claim that in the first week of No CAPTCHA reCAPTCHA usage, users moved to the main website much faster than under previous methods.

As for the safety aspects, adding many layers of cues makes it much more difficult to enter a site, which the ‘I’m not a robot’ method clearly aids, as compared to the single transcribing of text in the earlier CAPTCHA methods. Google not releasing the cues keeps bot makers guessing what they might be, ensuring that the reCAPTCHA always has the upper hand.

This method is also a boon for people with visual impairments, as it reduces the time it takes to transcribe and replaces it with just a click and the occasional need for tagging. The ‘No CAPTCHA reCAPTCHA’ could see further development in the future as more cues are added in the algorithm to check the legitimacy of the user.

It’s safe to say that the problem of bots won’t be going away anytime soon, but for the moment, it appears that humans have a lead in the digital arms race against them!

How well do you understand the article above!

References (click to expand)

- Are you a robot? Introducing “No CAPTCHA reCAPTCHA”. security.googleblog.com

- reCAPTCHA - Google. Google LLC

- L von Ahn. CAPTCHA: Using Hard AI Problems For security. The School of Computer Science at Carnegie Mellon University in Pittsburgh, Pennsylvania, US

- reCAPTCHA just got easier (but only if you're human). security.googleblog.com