
Amazon’s Alexa saves a voice profile for each user, from which it creates acoustic models that are stored in the cloud. When it hears a new command, it compares the input with those acoustic models to identify the user.

Speech is the natural mode of communication between people. If you watch a conversation flow, it becomes apparent how effortlessly we can speak, how fundamental it is to the way we are. And rightly so, as it enables the quick transfer of information filled with the rich nuances of language, culture, gestures, and tone.

This mode of input has gone largely unused in human-computer interaction; for most of our communication with computers, we have used keyboards, mice, and touchscreens, operated with our thumbs and fingers.

This is not for lack of imagination though, as science fiction has been rife with voiced personas who assist human protagonists, from JARVIS helping Tony Stark build a next-level flying bodysuit in Iron Man to Samantha helping the main character of Her get out of a rut and discover what love truly means.

Although the current state of voice assistance is nowhere near the capabilities of JARVIS or Samantha, voice-enabled devices have seen mass-market adoption in recent years. Among these, the market leader is the Amazon Echo, which has left its competition far behind and holds 70% of the US market.

It lets you play music and games, order things from Amazon, set reminders, stream podcasts, make to-do lists, and automate home lights, along with many other functionalities that are added by Amazon or third-party developers who build on top of the Alexa platform.

All of this functionality is powered by your voice alone! The question is, how does Alexa recognize who is giving the commands? Let’s try to understand the context around Amazon Echo to get a better idea of how this behind-the-scenes magic occurs.

Alexa’s Conception

Amazon began developing its voice-enabled smart speakers at Lab126, a wholly owned subsidiary in Silicon Valley that is responsible for the research and development of Amazon’s computer hardware. The Echo was conceived in 2010 as an attempt by Amazon to broaden its hardware range beyond its Kindle e-reader.

The device was released much later, and the initial sale was invite-only, though it became widely available by July 2015. In the first release, the device shipped with a remote control, as its creators were unsure whether the speaker alone would be able to register voice commands. Once consumers had used the first batch, it became clear that the device coped well on its own, and the remote control was phased out in subsequent releases.

During development, the wake word (the word the device listens for before registering a command) was ‘Amazon’, and the device itself was called the Amazon Flash. However, the development team felt that ‘Amazon’ is too common a word, one that comes up in conversations and television commercials, so it could trigger the device unintentionally and even make it order something from Amazon. They suggested ‘Alexa’ as the wake word and Amazon Echo as the device name, a decision that seems to have paid off. That said, a user can still change the wake word to ‘Amazon’, ‘Echo’ or ‘Computer’ if they wish.

The device has been wholeheartedly adopted not only by consumers, but also by developers. As of September 2019, the number of skills that Alexa could perform was 100,000, all of which rely on the robust ecosystem that Amazon has built.


The Alexa Ecosystem – The Journey Of Your Voice

In January 2019, Amazon reported that it had sold 100 million Alexa devices. Alexa is integrated into many of the products released by Amazon, as well as into third-party products. Amazon has created a platform for voice-enabled devices and third-party skills, which developers can use to teach Alexa particular tasks without needing server space of their own.

The Echo itself doesn’t house much of the processing power needed to recognize commands and fulfill them. It holds an array of microphones and a cylindrical speaker. The processing is done by the mammoth cloud computing infrastructure that Amazon already has in place: AWS (Amazon Web Services). A small computer in the device listens continuously for its wake word, after which it registers the command you give.

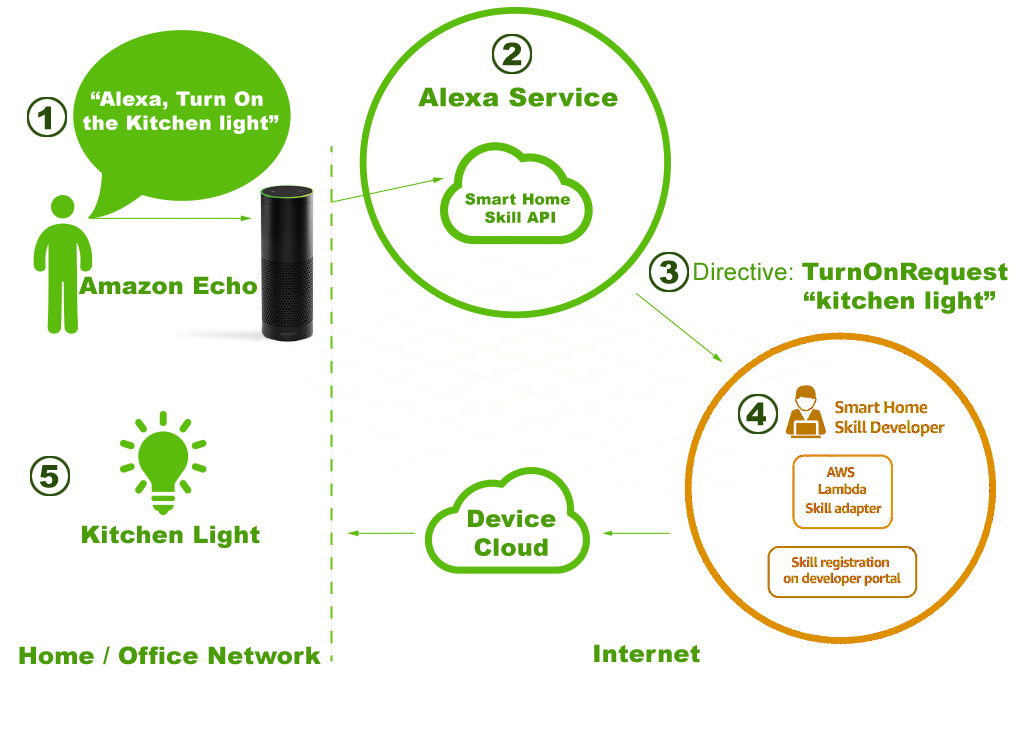

When you give Alexa a command, the onboard computer sends it to the cloud, where it is interpreted by the Alexa Voice Service (AVS). AVS breaks the command down and takes the necessary action, depending on whether the skill involved is an in-house skill or one developed by a third party. The result is then relayed back to the Echo and delivered to you. All of this happens over your WiFi, in seconds, without you noticing any of it. The next interesting bit comes when it recognizes your voice!
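
To make that journey a little more concrete, here is a toy sketch in Python of the routing step: a command arrives as text, is broken down, and is handed to either a built-in skill or a third-party one. Every name here (`Command`, `interpret`, `handle`, the two registries and the example skills) is made up for illustration; this is not Amazon's actual code or API, only a simplified picture of the idea.

```python
from dataclasses import dataclass


@dataclass
class Command:
    skill: str    # which skill the request is meant for
    payload: str  # the rest of the spoken request


def set_timer(payload: str) -> str:
    return f"Timer set for {payload}"


def trivia_game(payload: str) -> str:
    return f"Starting trivia about {payload}"


# Hypothetical registries: one for in-house skills, one for third-party skills.
BUILT_IN = {"timer": set_timer}
THIRD_PARTY = {"trivia": trivia_game}


def interpret(text: str) -> Command:
    """Break a command down: first word names the skill, the rest is the payload."""
    skill, _, payload = text.partition(" ")
    return Command(skill=skill.lower(), payload=payload)


def handle(text: str) -> str:
    """Route the command to the matching skill and return the spoken response."""
    command = interpret(text)
    if command.skill in BUILT_IN:
        return BUILT_IN[command.skill](command.payload)
    if command.skill in THIRD_PARTY:
        return THIRD_PARTY[command.skill](command.payload)
    return "Sorry, I don't know that one."
```

Calling `handle("timer 10 minutes")` would go to the built-in timer, while `handle("trivia space")` would be passed on to the third-party skill. A real assistant interprets free-form sentences rather than relying on the first word, but the hand-off between in-house and third-party skills follows the same outline.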


How Does Alexa Recognize Your Voice? – Voice Profiles

When you command Alexa to perform a certain task, you preface your command with the wake word (‘Alexa’, ‘Amazon’, ‘Echo’ or ‘Computer’). Your voice is detected as the analog input in this instance and must be converted into a digital format for the device to understand your command and perform the necessary action.

This is where analog-to-digital conversion comes in: the signal picked up by the microphones is digitized and then handed to Alexa’s Automatic Speech Recognition (ASR). This deep-learning process converts spoken sounds into words, making it the first step in voice-enabled assistance.
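
The analog-to-digital step can be illustrated with a few lines of Python. The sketch below stands in for a microphone by generating a pure tone, samples it at regular intervals, and rounds each sample to a 16-bit integer. The function name `sample_and_quantize`, the 16 kHz sample rate and the 16-bit depth are assumptions chosen for the example, not figures published for the Echo.

```python
import math


def sample_and_quantize(frequency_hz, sample_rate_hz, duration_s, bits=16):
    """Turn a continuous sine tone into a list of signed integer samples."""
    max_level = 2 ** (bits - 1) - 1           # 32767 for 16-bit audio
    count = round(sample_rate_hz * duration_s)
    samples = []
    for i in range(count):
        t = i / sample_rate_hz                # time of this sample in seconds
        amplitude = math.sin(2 * math.pi * frequency_hz * t)  # between -1 and 1
        samples.append(round(amplitude * max_level))          # quantize
    return samples


# Half a second of a 440 Hz tone, sampled 16,000 times per second.
digital_audio = sample_and_quantize(440, 16000, 0.5)
```

The resulting list of integers is the kind of digital signal that a speech recognizer can work with; turning those numbers into words is the job of the ASR model, which is far beyond the scope of this sketch.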

When you start using Alexa, it creates your unique voice profile in its database, which is stored in the cloud. This profile is a collection of your voice samples, which it uses to create acoustic models of the characteristics of your voice. From then on, whenever you give a command, it compares the incoming voice sample with your acoustic models and verifies whether it is, in fact, you who is speaking. It uses the same process to differentiate between multiple users in the same household.
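
Amazon does not publish the details of this comparison, but the general idea can be sketched with a common technique: represent each voice as a list of numbers (a feature vector), average a user's samples into a model, and pick the stored model most similar to the incoming voice. In the Python sketch below, the three-number vectors, the names `alice` and `bob`, the helper functions and the 0.9 threshold are all invented for illustration; real systems use much longer vectors learned by neural networks.

```python
import math


def cosine_similarity(a, b):
    """Similarity of two vectors: 1.0 means they point in the same direction."""
    dot = sum(x * y for x, y in zip(a, b))
    norm_a = math.sqrt(sum(x * x for x in a))
    norm_b = math.sqrt(sum(y * y for y in b))
    return dot / (norm_a * norm_b)


def average(vectors):
    """Build a simple 'acoustic model' by averaging a user's voice samples."""
    return [sum(column) / len(vectors) for column in zip(*vectors)]


def identify(profiles, incoming, threshold=0.9):
    """Return the best-matching user, or None if nobody is similar enough."""
    best_name, best_score = None, threshold
    for name, model in profiles.items():
        score = cosine_similarity(model, incoming)
        if score >= best_score:
            best_name, best_score = name, score
    return best_name


# Hypothetical household with two enrolled voice profiles.
profiles = {
    "alice": average([[0.9, 0.1, 0.3], [0.8, 0.2, 0.4]]),
    "bob": average([[0.1, 0.9, 0.5], [0.2, 0.8, 0.6]]),
}
```

With these made-up numbers, `identify(profiles, [0.85, 0.15, 0.35])` picks `alice`, while a voice that resembles neither profile falls below the threshold and is treated as an unknown speaker.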

When you use a third-party skill with your voice, Alexa assigns the skill a numeric identifier for your voice, which enables the skill to distinguish you from other users. You can personalize your settings for all the skills provided by Alexa and assign different privileges to different users.
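
One plausible way to produce such an identifier, shown here purely as an assumption and not as Amazon's method, is to derive a number from the voice profile and the skill together, so that the skill can tell users apart without ever seeing the voice data itself. The function `skill_user_id`, the secret key and the example IDs below are all hypothetical.

```python
import hashlib
import hmac

# Hypothetical secret kept by the platform and never shared with skills.
SECRET_KEY = b"example-only-secret"


def skill_user_id(voice_profile_id: str, skill_id: str) -> int:
    """Derive a stable numeric identifier for one user within one skill."""
    message = f"{voice_profile_id}:{skill_id}".encode("utf-8")
    digest = hmac.new(SECRET_KEY, message, hashlib.sha256).digest()
    return int.from_bytes(digest[:8], "big")  # first 8 bytes as an integer


alice_in_trivia = skill_user_id("profile-alice", "trivia-skill")
bob_in_trivia = skill_user_id("profile-bob", "trivia-skill")
```

The same person always gets the same number inside a given skill, two people in the same household get different numbers, and a different skill sees a different number for the same person.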

So go ahead, create your voice profile and customize your Echo.
